This is a fairly straightforward website in that it’s a static, Hugo-generated site. The site is hosted on Azure Storage and pumped into your browser via Microsoft’s global CDN.

All traffic is encrypted by default.

The hosting platform’s environments (dev, stg, and the beloved prod) are built with Pulumi IaC code written in Go. The code creates a unique Resource Group per environment with all the functionality needed to host the site, including DNS records and, in non-prod environments, a TLS certificate that’s auto-rotated by the Azure platform. Due to Azure limitations on root domain auto-TLS provisioning, the prod environment uses a regular Comodo-sourced certificate. Microsoft would prefer users migrate to the more expensive Front Door service, which provides auto-rotating TLS certificates on root domains (as of Q4 2023).

All secrets are encrypted at rest with SOPS and stored in Git or in Pulumi Cloud (as state). Pulumi-generated secrets from Azure are shipped into GitHub’s Secrets provider and are accessible per environment (e.g., dev, stg). These secrets are used by CI/CD Actions invoked by a simple caller workflow from the Hugo site’s repo. A PR opened in the Hugo site’s repo will automatically trigger a build -> deploy to dev.elyclover.com.

All secrets are auto-provisioned into their necessary locations this way with no user intervention required. A DevOps/SRE role will not need to touch keys, rotate them, or share them in a shared browser-based password vault with your team. The Pulumi IaC code can be used to rotate these credentials within the CI/CD system automatically. This also provides DR capabilities and eases Change Management concerns out of the box.

Another action, Release Please, then sweeps all PRs whose titles are parseable as Conventional Commits into Release PRs and auto-generates release notes summarizing what’s in the release. Each release PR is also assigned a unique semantic version like v2.1.2. This semantic version is based on the size and risk of the changes, signaled by simple PR title prefixes like feat or fix.

Every time an additional PR like this is merged to main, it is auto-added to the Release rollup and we re-trigger a build and deploy of the site to stg.elyclover.com. The purpose here is to see all individual PRs, each with its own agenda, merged and co-existing in a single environment before release to prod.

Finally, when a release PR is merged into main, a full GitHub Release is triggered along with a corresponding git tag referencing the semantic version for the release. This triggers a build + deploy to elyclover.com, which is the production environment in this case.
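The Release Please step described above might be wired up with a small workflow along these lines. This is a minimal sketch and not the site's actual configuration: the action reference (`googleapis/release-please-action@v4`), the `simple` release type, and the `RELEASE_PLEASE_TOKEN` secret name are all assumptions made for illustration.

```yaml
# Hypothetical workflow: maintains the rolling Release PR and cuts the
# GitHub Release + tag when that PR is merged into main.
name: release-please

on:
  push:
    branches:
      - main

permissions:
  contents: write        # needed to create tags and releases
  pull-requests: write   # needed to open and update the Release PR

jobs:
  release-please:
    runs-on: ubuntu-latest
    steps:
      - uses: googleapis/release-please-action@v4
        with:
          # "simple" keeps a version file and changelog without assuming a
          # language ecosystem (assumption: the site repo needs nothing more)
          release-type: simple
          # Hypothetical secret name. Releases created with the default
          # GITHUB_TOKEN generally do not start other workflow runs, so a
          # separate token is likely needed for the release trigger to fire.
          token: ${{ secrets.RELEASE_PLEASE_TOKEN }}
```

With squash merges, the PR title becomes the commit message on main, which is why a `feat:` or `fix:` title prefix is enough for the version bump to be computed.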

This GitOps flow is possible due to a small workflow that invokes reusable Actions I’ve written and released under an Open Source license. Here’s how they are invoked from the repo holding our Hugo site code. Three Jobs run sequentially and handle the entire operation.

    name: hugo-cicd

    on:
      pull_request:
        types: [ synchronize, opened ]
      release:
        types:
          - released

    defaults:
      run:
        shell: bash

    concurrency: cicd-v2

    jobs:
      # determine what kind of event triggered us, what framework we'll ultimately build with
      gen-metadata:
        runs-on: ubuntu-latest
        outputs:
          build-id: ${{ steps.build-metadata.outputs.artifact-id }}
          build-domain: ${{ steps.build-metadata.outputs.composite-domain }}
          env-target: ${{ steps.build-metadata.outputs.env-target }}
          hugo-version: ${{ steps.build-metadata.outputs.hugo-version }}
        steps:
          - name: generate build metadata
            id: build-metadata
            uses: kevholmes/hugo-azure-actions/.github/actions/generate-metadata@v1
            with:
              hugo-version: 0.119.0
              site-base-tld: elyclover.com

      # compile the hugo site and save it as an artifact, use metadata generated earlier for context
      build:
        runs-on: ubuntu-latest
        needs: gen-metadata
        environment:
          name: ${{ needs.gen-metadata.outputs.env-target }}
          url: ${{ needs.gen-metadata.outputs.build-domain }}
        steps:
          - name: checkout source
            uses: actions/checkout@v4
            with:
              fetch-depth: 0
          - name: build hugo site
            uses: kevholmes/hugo-azure-actions/.github/actions/build@v1
            with:
              build-id: ${{ needs.gen-metadata.outputs.build-id }}
              build-domain: ${{ needs.gen-metadata.outputs.build-domain }}
              hugo-version: ${{ needs.gen-metadata.outputs.hugo-version }}

      # deploys to azure blob storage container, secrets/params are by Actions Environment
      # which is part of metadata gen process, clears CDN cache and clobbers all files
      # in the blob container that conflict with the new release
      deploy-azure:
        runs-on: ubuntu-latest
        needs: [gen-metadata, build]
        environment:
          name: ${{ needs.gen-metadata.outputs.env-target }}
          url: ${{ needs.gen-metadata.outputs.build-domain }}
        steps:
          - name: deploy hugo site to azure
            uses: kevholmes/hugo-azure-actions/.github/actions/deploy@v1
            with:
              build-id: ${{ needs.gen-metadata.outputs.build-id }}
              az-client-id: ${{ vars.CLIENT_ID }}
              az-client-secret: ${{ secrets.CLIENT_SECRET }}
              az-subscription-id: ${{ vars.SUBSCRIPTION_ID }}
              az-tenant-id: ${{ vars.TENANT_ID }}
              az-storage-acct: ${{ vars.AZ_STORAGE_ACCT }}
              az-cdn-profile-name: ${{ vars.AZ_CDN_PROFILE_NAME }}
              az-cdn-endpoint: ${{ vars.AZ_CDN_ENDPOINT }}
              az-resource-group: ${{ vars.AZ_RESOURCE_GROUP }}

The above workflow has a simple concurrency lock. It allows only one CI/CD workflow to execute at any time, no matter the target environment. The workflow runs for Pull Requests and for GitHub Release events (generated when Release Please PRs are merged into main).

The overall goals of the three jobs are as follows:

- Generate metadata for this build - determine what environment we are deploying to

- Run the build and generate an artifact

- Deploy the build to the target environment determined in step 1

The CI/CD actor’s auth is tightly scoped, following the principle of least privilege (PoLP), to allow only the few operations deployments need: read/write/delete on the Azure Storage Account and clearing the CDN cache on Microsoft’s side.

Each environment has its own Resource Group and Service Principal for CI/CD pipelines.

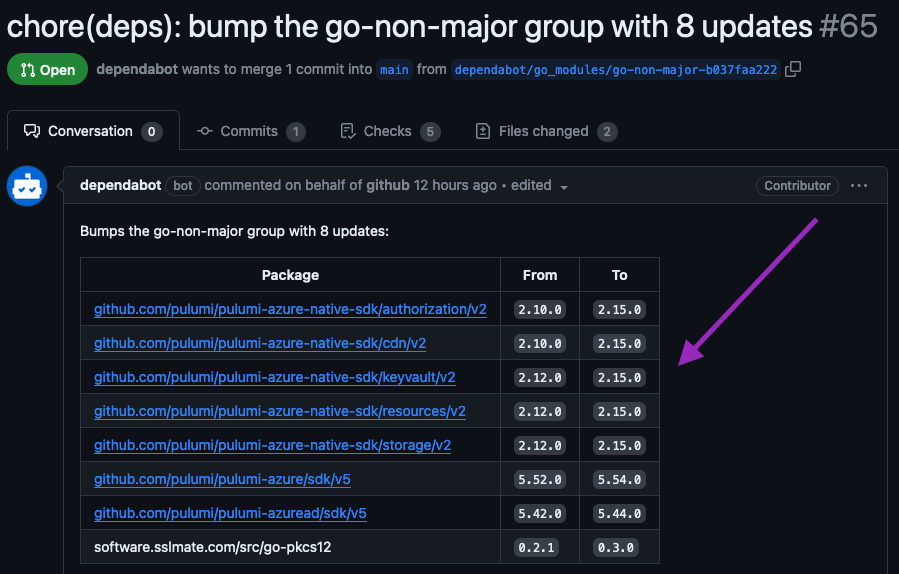

The Actions repo where the reusable website CI/CD workflows live has some additional automation, like Dependabot, to ensure dependencies are kept up-to-date. The same goes for the repo holding our Pulumi IaC: Dependabot updates any Go and Actions dependencies for us on a weekly basis. I am using a newer Dependabot feature called groups that places related dependency updates into a single PR, reducing the amount of merge -> dependabot rebase PR -> merge work a developer needs to do to keep the project up-to-date.

I’ve configured it to group all minor and patch level updates (in regard to semantic versions) in a single PR, and leave the major version updates to their own unique Dependabot PRs.
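A grouping setup like the one described could look roughly like the following `.github/dependabot.yml`. This is a sketch rather than the repo's actual file: the group names and the root directory paths are assumptions made for illustration.

```yaml
# Hypothetical .github/dependabot.yml for the Pulumi IaC repo
version: 2
updates:
  # Go module dependencies (Pulumi SDK, providers, etc.)
  - package-ecosystem: gomod
    directory: /
    schedule:
      interval: weekly
    groups:
      # All minor and patch bumps land in one PR; major bumps are not
      # matched by this group, so each still gets its own PR
      go-minor-and-patch:
        patterns:
          - "*"
        update-types:
          - minor
          - patch

  # Actions referenced by workflows in this repo
  - package-ecosystem: github-actions
    directory: /
    schedule:
      interval: weekly
    groups:
      actions-minor-and-patch:
        patterns:
          - "*"
        update-types:
          - minor
          - patch
```

Leaving major versions out of the groups keeps potentially breaking upgrades isolated, so they can be reviewed and reverted independently of routine updates.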

I still have extra work on this project, including sorting out a more advanced concurrency scheme.
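One possible direction for that concurrency scheme, offered as an untested sketch: drop the workflow-wide `concurrency: cicd-v2` lock and lock per target environment at the job level instead, so a dev deploy does not block a prod deploy. The group name format is an assumption; the `env-target` output comes from the `gen-metadata` job in the workflow above.

```yaml
# Fragment of the hugo-cicd workflow; only the changed job is shown.
jobs:
  deploy-azure:
    runs-on: ubuntu-latest
    needs: [gen-metadata, build]
    # One lock per environment (e.g. cicd-dev, cicd-stg, cicd-prod)
    # instead of a single lock for every run of the workflow
    concurrency:
      group: cicd-${{ needs.gen-metadata.outputs.env-target }}
      # Let an in-flight deploy finish rather than interrupting it
      cancel-in-progress: false
    environment:
      name: ${{ needs.gen-metadata.outputs.env-target }}
      url: ${{ needs.gen-metadata.outputs.build-domain }}
    # steps: unchanged from the workflow above
```

Job-level `concurrency` can read the `needs` context, which the top-level `concurrency` key cannot, and that is why the lock moves down to the job in this sketch.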

Another to-do item is GitOps automation tied to the Pulumi IaC repo. I want a new PR to apply only to the dev env Azure Resource Group, a Release Please PR with rollups of all individual changes to apply to staging, and a Release event in GH to trigger a pulumi up or similar for prod. The pulumi up process has been working idempotently about 99.99% of the time over the last month, so it seems ready for automation. There’s a Pulumi feature to deploy PRs to ephemeral environments that I could use to skip the static dev.elyclover.com setup and move to some semi-unique-id.elyclover.com (maybe using the PR #?), then clean these ephemeral environments up every 48 hours to save on costs. This effort could also tie into the concurrency work, since we could build and deploy multiple dev-level environments simultaneously.
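The PR -> dev, Release PR -> stg, Release -> prod mapping for the IaC repo could be sketched as a workflow like this one. Nothing here is taken from the actual repo: the stack names (`dev`, `stg`, `prod`), the `PULUMI_ACCESS_TOKEN` secret name, and the assumption that Release Please PR branches start with `release-please--` are all illustrative, and the Azure credentials the provider needs are omitted.

```yaml
# Hypothetical GitOps workflow for the Pulumi IaC repo
name: pulumi-iac

on:
  pull_request:
    types: [ synchronize, opened ]
  release:
    types:
      - released

jobs:
  up:
    runs-on: ubuntu-latest
    steps:
      - name: checkout source
        uses: actions/checkout@v4

      - name: set up go
        uses: actions/setup-go@v5
        with:
          go-version-file: go.mod

      - name: pulumi up
        uses: pulumi/actions@v5
        with:
          command: up
          # Release event -> prod, Release Please PR -> stg, any other PR -> dev
          stack-name: ${{ github.event_name == 'release' && 'prod' || startsWith(github.head_ref, 'release-please--') && 'stg' || 'dev' }}
        env:
          PULUMI_ACCESS_TOKEN: ${{ secrets.PULUMI_ACCESS_TOKEN }}
```

Running `command: preview` on ordinary PRs and reserving `up` for merges would be a more cautious variant if the occasional non-idempotent run is a concern.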

I have found what appears to be a bug in how the Azure native Storage and Azure legacy CDN providers interact within Pulumi. It manifests as a CDN endpoint you can’t delete until the DNS CNAME records are gone: the providers have some issue with their combined DAG that gets the order wrong and fails to delete the CNAME record before our CDN EP custom domain (at least that’s how it manifests). This out-of-order issue throws an error and can break the automation when tearing environments down programmatically. Right now, I have to delete the CNAME record manually, refresh Pulumi’s state for that environment, and then continue with the automated destruction process via Pulumi.

If you have any questions or want to contribute, please reach out.

- Kevin